This section describes how to set up a PostgreSQL gateway 2.0 instance. See the migration guide if you’re migrating from a previous version.

Before you start

Make sure you have registered the Cognite API and the CDF application in Microsoft Entra ID and set up Microsoft Entra ID and CDF groups to control access to CDF data.Step 1: Register an app in Microsoft Entra ID to use with PostgreSQL gateway

Sign in to Azure portal

Sign in to the Azure portal as an admin.

Select tenant

If you have access to multiple tenants, use the Directory + subscription filter in the top menu to select the tenant in which you want to register an application.

Register new application

Under Manage, select App registrations > New registrations.In the Register an application window, enter the app name, and then select Register.

Copy application ID

Copy and make a note of the Application (client) ID. This value is required to create user credentials for the PostgreSQL gateway.

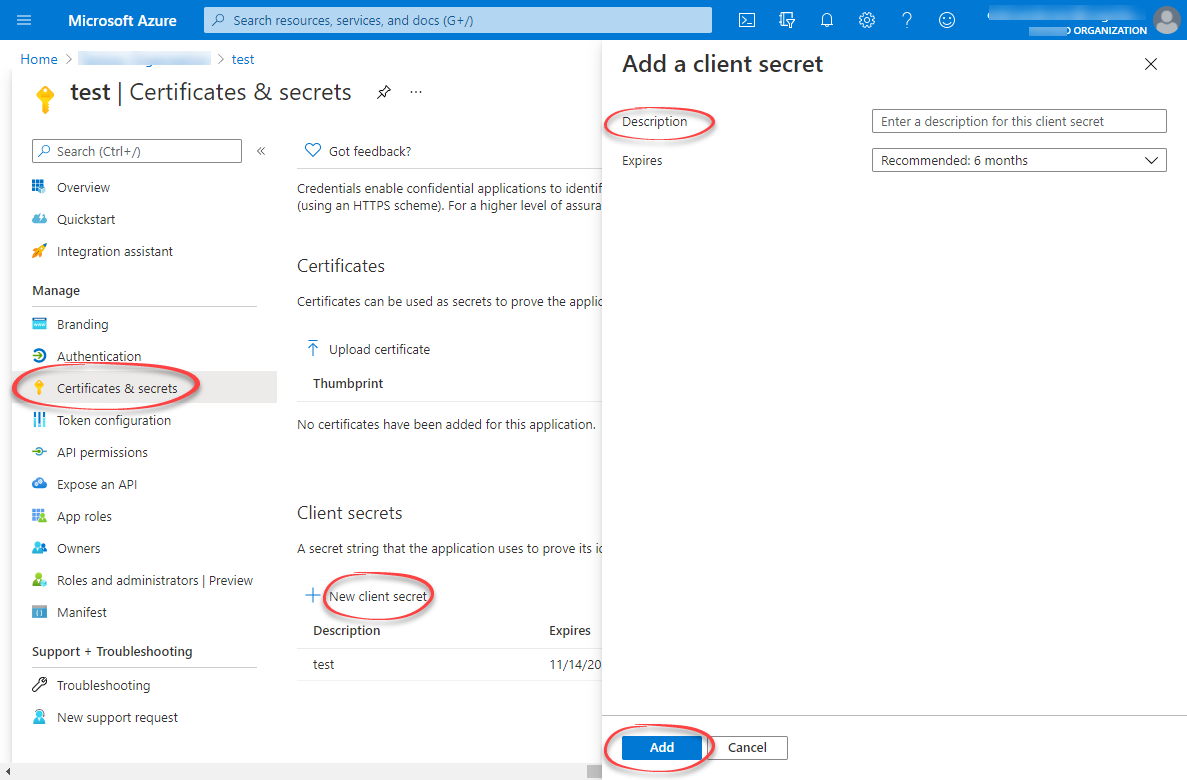

Create client secret

- Under Manage, select Certificates & secrets.

- Select New client secret.

- Enter a client secret description.

- Select an expiry time.

- Select Add.

Step 2: Create a group in Microsoft Entra ID and add the registered app as its member

Create group

Create a group, read more here.

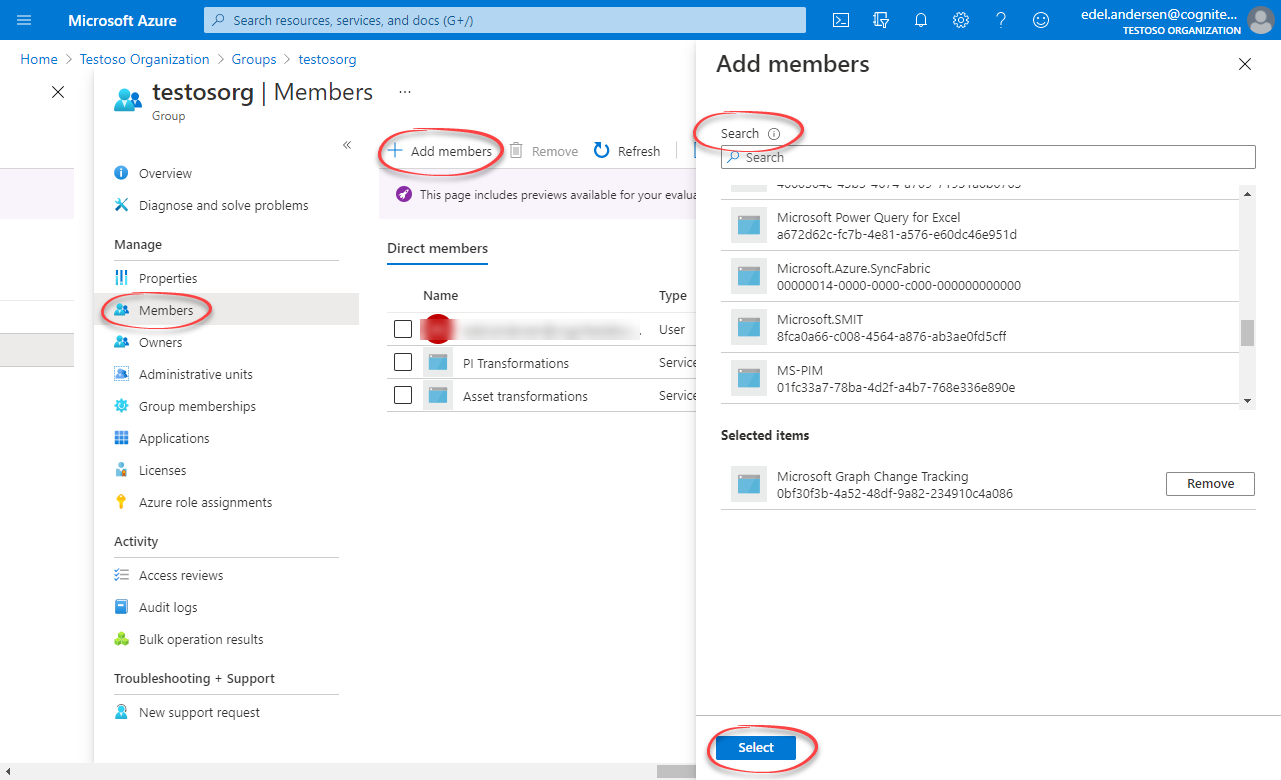

Add app as member

- Open the group. Under Manage, select Members > Add members.

- Find the app you created above and click Select.

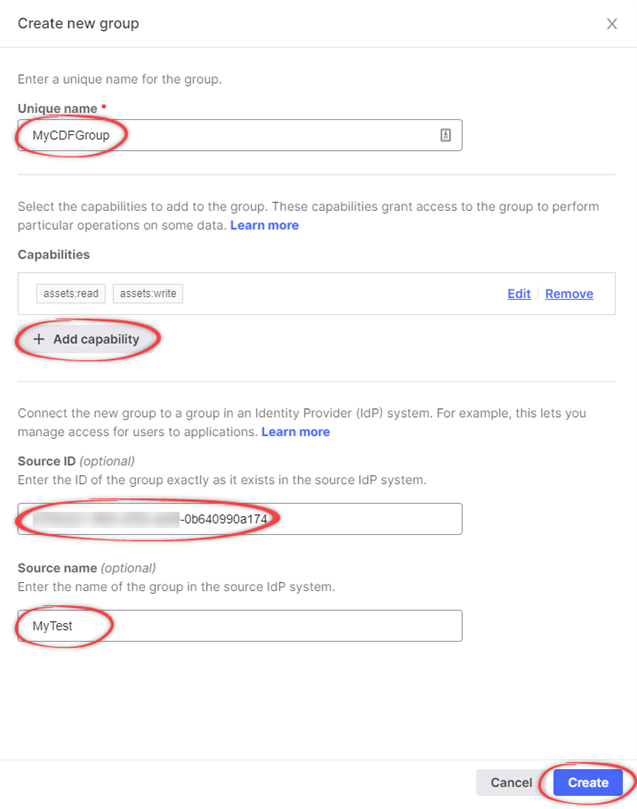

Step 3: Create a group in CDF and link to the Microsoft Entra ID group

Add capabilities

Add relevant capabilities. The minimum required capabilities are:

postgresGateway:READpostgresGateway:WRITEproject:listgroups:listsession:CREATE

read and/or write capabilities for the CDF resources you want to ingest data into. For instance, if you’re ingesting assets, add asset:read and asset:write.If you revoke the capabilities in the CDF group, you also revoke access for the PostgreSQL gateway.

Link to Microsoft Entra ID group

Link the CDF group to a Microsoft Entra ID (ME-ID) group:

- In the Source ID field, enter the Object Id for the ME-ID group exactly as it exists in ME-ID.

- In the Source name field, enter the name of the group in Microsoft Entra ID.

Step 4: Create user credentials for the PostgreSQL gateway

Send POST request

Send a POST request to

https://{cluster}.cognitedata.com/api/v1/projects/{project}/postgresgateway API.Where:

clusteris where your CDF instance exists. If you don’t know the cluster name, contact Cognite support.projectis the organization name of your CDF instance.

Get Bearer token

Get a Bearer token from your identity provider for authentication. To get a bearer token from Postman, see the illustration below.

Get session nonce

Get the sessions nonce using the Cognite API using the Bearer token obtained from above.

Migrate to PostgreSQL gateway 2.0

The PostgreSQL gateway 2.0 replaces the previous version (1.0). As indicated in Step 4 above, this version usessessions on behalf of your project and OIDC authentication to make requests to CDF.

Most of the tables remain the same, but may have columns added or renamed. Some tables have been changed or divided up for clarity.

These modifications are highlighted below:

Assets

Table name: assets Added| Columns | Type |

|---|---|

created_time | TIMESTAMPTZ |

last_updated_time | TIMESTAMPTZ |

Event

Table name: events Added| Columns | Type |

|---|---|

created_time | TIMESTAMPTZ |

last_updated_time | TIMESTAMPTZ |

Extraction pipeline runs

Table name: extraction_pipeline_runs Added| Columns | Type |

|---|---|

id | BIGINT |

Extraction pipelines

Table name: extraction_pipelines Added| Columns | Type |

|---|---|

contacts | JSON |

raw_tables | JSON |

documentation | VARCHAR(10000) |

last_updated_time | TIMESTAMPTZ |

created_by | VARCHAR(255) |

Files

Table name: files Added| Columns | Type |

|---|---|

directory | VARCHAR(512) |

asset_ids | BIGINT[] |

source_created_time | TIMESTAMPTZ |

source_modified_time | TIMESTAMPTZ |

security_categories | BIGINT[] |

created_time | TIMESTAMPTZ |

last_updated_time | TIMESTAMPTZ |

Labels

Table name: labels Added| Columns | Type |

|---|---|

data_set_id | BIGINT[] |

created_time | TIMESTAMPTZ |

Sequences

Table name: sequences Added| Columns | Type |

|---|---|

created_time | TIMESTAMPTZ |

Sequence rows

Table name: sequence_rows Changed| Old | New |

|---|---|

row_values ⇾ values | JSON ⇾ JSON |

Datapoints

Thedatapoints table has been divided into 2 different tables because version 1.0 only supported double datapoints. These are string_datapoints and double_datapoints.

It includes the following columns:

| Columns | Type |

|---|---|

value | VARCHAR(255) for string_datapoints DOUBLE PRECISION for double_datapoints |

timestamp | TIMESTAMPTZ |

external_id | VARCHAR(255) |

id | BIGINT |

Timeseries

Thetimeseries table has been renamed to time_series.

Removed

The legacy_name field has been retired and is no longer available for use.

Raw

Theraw table is renamed to raw_rows for better understanding and proper differentiation between raw table data sets and raw table names.

raw_rows

Added

| Columns | Type |

|---|---|

_raw_last_updated_time | TIMESTAMPTZ |

| Columns | Type |

|---|---|

database ⇾ _raw_database | VARCHAR ⇾ VARCHAR(32) |

table ⇾ _raw_table | VARCHAR ⇾ VARCHAR(64) |

key ⇾ _raw_key | VARCHAR(1024) ⇾ TEXT |

columns ⇾ _raw_columns | JSON ⇾ JSON |

| Columns | Type |

|---|---|

_raw_database | VARCHAR(32) |

_raw_table | VARCHAR(64) |