3.6 * TS{externalId='wind-speed'} to convert units from m/s to k/h.

Also, you can combine time series: TS{id=123} + TS{externalId='my_external_id'} / TS{space='data modeling space', externalId='dm id'}, use functions with time series sin(pow(TS{id=123}, 2)), and aggregate time series TS{id=123, aggregate='average', granularity='1h'}+TS{id=456}. See below for a list of supported functions and aggregates.

Functions

Synthetic time series support these functions:- Inputs with internal ID, external ID, or data modeling instance ID (space and externalId). Single or double quotes must surround the external ID and space.

- Mathematical operators +,-,*,/.

- Grouping with brackets ().

- Trigonometrics. sin(x), cos(x) and pi().

- ln(x), pow(base, exponent), sqrt(x), exp(x), abs(x).

- Variable length functions. max(x1, x2, …), min(…), avg(…).

- round(x, decimals) (

-10<decimals<10). on_error(expression, default), for handling errors like overflow or division by zero.map(expression, [list of strings to map from], [list of values to map to], default).

Functions like

avg(x1, x2, ...), min(...), and max(...) compute values across multiple inputs at each point in time. They don’t aggregate a single time series across a time range. To aggregate over time, use the aggregate parameter instead, for example, ts{id=123, aggregate='average', granularity='1h'}.Convert string time series to doubles

Themap() function can handle time series of type string and convert strings to doubles. If, for example, a time series for a valve can have the values "OPEN" or "CLOSED", you can convert it to a number with:

"OPEN" is mapped to 1, "CLOSED" to 0, and everything else to -1.

Aggregates on string time series are currently not supported. All string time series are considered to be stepped time series.

Error handling: on_error()

There are three possible errors:- TYPE_ERROR: You’re using an invalid type as input. For example, a string time series with the division operator.

- BAD_DOMAIN: You’re using invalid input ranges. For example, division by zero, or sqrt of a negative number.

- OVERFLOW: The result is more than 10^100 in absolute value.

on_error() function, for example. on_error(1/TS{externalId='canBeZero'}, 0). Note that this can happen because of interpolation, even if none of the RAW data points are zero.

Aggregation

You define aggregates similar to time series inputs, but aggregates have extra parameters:aggregate, granularity, and (optionally) alignment. Aggregate must be one of interpolation, stepinterpolation, or average.

You must enclose the value of aggregate and granularity in quotes.

Granularity

The granularity decides the length of the intervals CDF takes the aggregate over. they’re specified as a number plus a granularity unit, like120s, 15m, 48h, or 365d.

Intervals are half-open, including the start time and excluding the end time. [start, start+granularity)

Granularity must be on the form NG where N is a number, and G is s, m, h, or d (second, minute, hour, day). The maximum number you can specify is 120, 120, 100.000, and 100.000 for seconds, minutes, hours, and days, respectively.

Output granularity

We return data points at any point in time where:- Any input time series has an input.

- All time series are defined (between the first and last data point, inclusive)

Interpolation

If there is no input data, CDF interpolates. As a general rule, CDF uses linear interpolation: finds the previous and next data point and draws a straight line between these. The other case is step interpolation: CDF uses the value of the previous data point. CDF interpolates any interval. If there are data points in 1971 and 2050, CDF defines the time series for all timestamps between. Most aggregates are constant for the whole duration of granularity. The only exception is the interpolation aggregate on non-step time series.Alignment

The alignment decides the start of the intervals and is specified in milliseconds. Thealignment parameter lets you align weekly aggregates to start on specific days of the week, for example, Saturday or Monday. By default, aggregation with synthetic time series is aligned to Thursday 00:00:00 UTC, 1 January 1970, for instance, alignment=0.

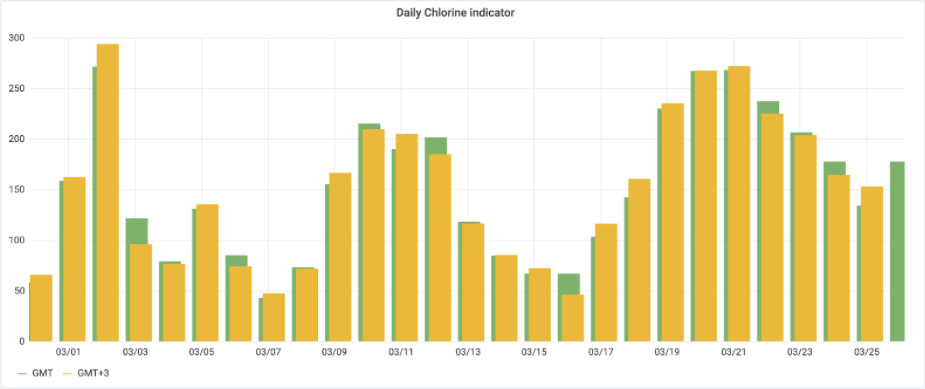

You can also use alignment to create aggregates that are aligned to different time zones. For example, the chart below shows the daily average chlorine indicator for city water aligned to two different time zones: GMT+0 and GMT+3.

-

To align to UTC/GMT+0 (aligned to: Sep 09 2020 00:00:00 UTC)

ts{externalId='houston.ro.REMOTE_AI[34]', alignment=1599609600000, aggregate='average', granularity='24h'} -

To align to UTC/GMT+3 (aligned to: Sep 09 2020 03:00:00 UTC)

ts{externalId='houston.ro.REMOTE_AI[34]', alignment=1599620400000, aggregate='average', granularity='24h'}

(N*granularity + alignment, (N+1)*granularity + alignment), where N is an integer.

Alignment differences from regular aggregates

Synthetic time series and regular data point retrieval align aggregate intervals differently:| Aspect | Regular data point retrieval | Synthetic time series |

|---|---|---|

| Alignment | Aligned to the query start time, rounded down to the granularity unit. | Aligned to epoch (midnight January 1, 1970 UTC) by default. |

| Override | Not configurable. | Use the alignment parameter. |

Time zone

ThetimeZone parameter controls how calendar-based granularities (day, month) are interpreted. Specify it at the query level (not per-expression) using any IANA time zone identifier, such as Europe/Oslo or America/New_York.

UTC.

Unit conversion

If a time series has a definedunitExternalId, you can convert its values to a different unit within the same quantity.

targetUnit or targetUnitSystem, but not both. The chosen target unit must be compatible with the original unit.

When querying data points using synthetic time series, the values will no longer retain their

unit information.

For instance, despite it not being physically accurate, it is possible to add values from a temperature time series to those from a distance time series.

Limits

In a single request, you can ask for:- 10 expressions (10 synthetic time series).

- 10.000 data points, summed over all expressions.

- 100 input time series referrals, summed over all expressions.

- 2000 tokens in each expression. Similar to 2000 characters, except that words and numbers are counted as a single character.