Power BI template

To get started quickly, you can use a Power BI template file (.pbix) that includes all the utility functions and helper functions documented on this page. It also includes additional functions for incremental refresh. The template saves you from manually copying and pasting each function into your Power BI report.

Download Power BI Template

Download the template pbix file to get started with all utility functions pre-configured.

What’s included

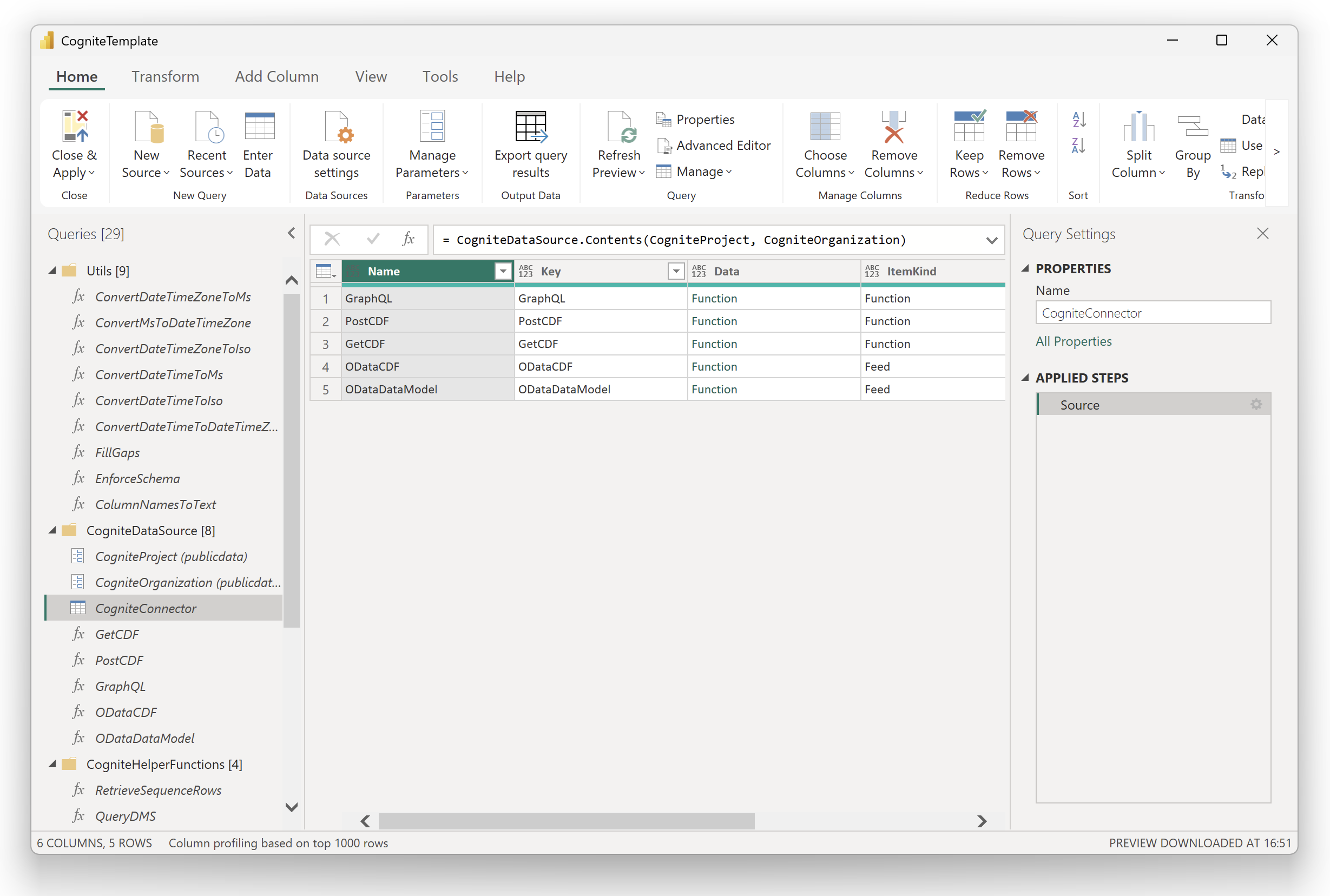

The template contains the following components:

| Folder | Description |

|---|---|

| Utils | Utility functions: timestamp conversion (ConvertDateTimeZoneToMs, ConvertMsToDateTimeZone, ConvertDateTimeZoneToIso, ConvertDateTimeToMs, ConvertDateTimeToIso, ConvertDateTimeToDateTimeZone), gap filling (FillGaps), schema enforcement (EnforceSchema), and column name conversion (ColumnNamesToText). For detailed information, see utility functions and incremental refresh. |

| CogniteDataSource | Pre-configured connection to the Cognite REST API Power BI connector with CogniteProject and CogniteOrganization parameters ready to update. |

| CogniteHelperFunctions | Helper functions: RetrieveSequenceRows, QueryDMS, RetrieveDataPoints, and RetrieveDataPointsMultipleTs. |

| Other Queries | Pre-configured RangeStart and RangeEnd parameters for incremental refresh, along with derived variables (RangeStartMs, RangeEndMs, RangeStartDtz, RangeEndDtz, RangeStartIso, RangeEndIso) ready to use with any connector function. |

Getting started with the template

Update connection parameters

Select Transform Data to open the Power Query editor. Update the

CogniteProject and CogniteOrganization parameters with your CDF project name and organization.Use the template as a companion file and refer to the connector functions, this page, and the incremental refresh documentation for detailed explanations, parameters, and usage examples.

Utility functions

Convert timestamps from/to epoch

CDF resource types expect and return timestamps using milliseconds since the Unix epoch. Power Query doesn’t have methods to automatically parse this format to adatetimezone type to represent timezone-aware timestamps. CDF data models represent timestamps using the ISO 8601 format for primitive fields.

Use the functions below to convert between a datetimezone variable and milliseconds since the Unix epoch and from a datetimezone variable to text in ISO 8601 format.

ConvertDateTimeZoneToMs

ConvertDateTimeZoneToMs

Convert datetimezone to milliseconds since epoch

ConvertMsToDateTimeZone

ConvertMsToDateTimeZone

Convert milliseconds since epoch to datetimezone

ConvertDateTimeZoneToIso

ConvertDateTimeZoneToIso

Convert DateTimeZone to ISO 8601 text representation

Fill gaps in time series data

This function creates a uniform time index and fills missing values using forward fill or linear interpolation. It’s used byRetrieveDataPoints when gap filling is enabled.

FillGaps

FillGaps

Fill gaps in time series data

Add function

To add a new function in Power Query, select Get Data > Blank Query and write your function or copy one of the functions below.Time deltas

It’s common to define start and end timestamps based on time deltas. The values will be updated when a dataset is refreshed. In the example below,EndTime is the current time, and StartTime is 7 days before EndTime. You can adapt this example to use different time deltas.

Time deltas

Common GET request

When you fetch data from CDF using theGetCDF function from the CDF REST API connector for Power BI, you must use query parameters to pass on filters to select which data to fetch.

The example below shows how you can add the externalIdPrefix and limit query parameters from the /timeseries endpoint to the URL to filter data on the server side.

List all time series instances with an externalId starting with a specific string

Common POST request

When you fetch data from CDF with thePostCDF function, you must write a request body to select which data to fetch. The function accepts a text representation of the JSON body, but you can also write the body using a Power Query record data type and then convert it to a JSON text data type before passing the value to the PostCDF function.

List all data modeling instances for a view using the DMS API

") with another set of double-quote characters:

ListInstancesDMS, you can use it in a new query by entering the field values in Power Query or by writing a new query:

GraphQL requests

When you fetch data from CDF using theGraphQL function, you must write a GraphQL request to select which data to fetch from a specific data model. The function expects you to specify the external ID of the space, the external ID of the view, the version of the view, the GraphQL query to run, and optionally a set of variables to be used in the query.

The query below uses the GraphQL syntax and passes the variables as JSON texts. Using variables in the query makes it easier to parameterize and use with external values.

List all work orders with an end date greater than a specific date

#(lf) represents a line-break character. In the example above, the query was pasted into the text area field in Power BI and the variables were passed as JSON text. Notice how Power BI added the line breaks to the original query and expressed it as a single-line text variable.

Alternatively, you can write the query directly as a multi-line text variable. You can define the variables as Power Query records and convert them to JSON text before passing them to the GraphQL function. For example, see how the ConvertDateTimeZoneToIso function converts a datetimezone variable to a text representation of the ISO 8601 format and then passes it as a variable to the query.

Advanced examples

Depending on the shape of the Cognite API response, you may need additional Power Query transformations to fetch and transform the data. Copy and adapt the examples below to fit your business needs.Fetch sequence rows with the PostCDF function

The Power Query function below fetches and processes sequence rows data for a sequence external ID from CDF. It sends a POST request, extracts column information, expands nested response data, and reorganizes it into tabular format. The function handles data type conversion, removes unnecessary fields, and groups data by row. The final output is a well-structured table with correctly typed columns.

RetrieveSequenceRows function

RetrieveSequenceRows function

Fetch sequence rows with PostCDF

Fetch instances from the DMS query endpoint with the PostCDF function

The Power Query function below retrieves and processes data modeling instances for a DMS query. It paginates through the response, extracts the instances, and expands the nested data.

QueryDMS function

QueryDMS function

Fetch instances from the DMS query endpoint with PostCDF

Fetch time series datapoints with the PostCDF function

The Power Query function below retrieves and processes aggregated time series datapoints for a time series within a time range. It converts local timezone inputs to UTC for the CDF API requests, supports multiple aggregates and custom granularity, and handles data pagination. The function then converts the returned UTC timestamps back to the local timezone, expands the nested API response, and outputs a well-formatted table with properly typed columns. It also includes local timestamps and decimal aggregate values.

The function also supports optional client-side gap filling. Use the fillGaps parameter to create a uniform time index and fill missing values.

| Fill mode | Description |

|---|---|

"ffill" | Forward fill: carries the last known value forward until a new value is encountered |

"linear" | Linear interpolation: calculates intermediate values based on time-weighted position between known values |

null | No gap filling (default behavior) |

ConvertDateTimeZoneToMs and ConvertMsToDateTimeZone functions are used to convert timestamps. To enable gap filling, add the FillGaps utility function.

RetrieveDataPoints function

RetrieveDataPoints function

Fetch time series datapoints with PostCDF

RetrieveDataPointsMultipleTs function

RetrieveDataPointsMultipleTs function

Fetch multiple time series datapoints with PostCDF

"ffill" or "linear" as the last parameter:

RetrieveDataPointsMultipleTs to iterate over a list of time series external IDs:

Learn more: Microsoft Power Query documentation